The video shows that X-Trainer, using an imitation learning neural network combined with a large vision-language model, learned to wash dishes on its own after 2 hours of training, about 70% less than the typical training time.

From the plates with red food residue, the sponge placed on the yellow plate, and the metal rack behind the plates, the robot deduces its task: clean the plates and store them in the metal rack.

It wipes three times, leaving no residual stains.

When the robot had finished washing a dish and was about to put it in the rack, a human suddenly interfered and dirtied the dish again. The robot quickly detected the change and responded immediately.

After the video was released, it sparked heated discussion among netizens, who are looking forward to the era of robots doing household chores!

Popular comments from netizens

Some even joked: if humans keep causing trouble, will the robot keep washing, or will it go on strike?

In fact, X-Trainer integrates cutting-edge intelligent robotics and AI technologies, enabling robots to quickly imitate and learn complex human actions and ultimately achieve behavior cloning.
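To make the idea of behavior cloning concrete, here is a minimal sketch: a policy is fitted by supervised learning on demonstrated (observation, action) pairs and then replays the learned mapping on new observations. This is only an illustration under strong assumptions. The one-dimensional linear policy, the function names, and the demonstration data are all hypothetical and are not X-Trainer's network, which maps camera images to arm actions.

```python
from statistics import mean


def fit_linear_policy(observations, actions):
    """Fit action = w * obs + b to demonstrations by ordinary least squares."""
    obs_mean = mean(observations)
    act_mean = mean(actions)
    cov = sum((o - obs_mean) * (a - act_mean)
              for o, a in zip(observations, actions))
    var = sum((o - obs_mean) ** 2 for o in observations)
    w = cov / var
    b = act_mean - w * obs_mean
    return w, b


def policy(obs, w, b):
    """Cloned policy: predict the demonstrator's action for an observation."""
    return w * obs + b


# Hypothetical demonstrations in which the human always chose 2 * obs + 1.
demo_obs = [0.0, 1.0, 2.0, 3.0]
demo_act = [1.0, 3.0, 5.0, 7.0]

w, b = fit_linear_policy(demo_obs, demo_act)
predicted = policy(4.0, w, b)  # action for an observation not in the demos
```

A real system would replace the linear fit with a neural network trained on image and joint-state data, but the structure is the same: demonstrations in, a supervised policy out.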

Lang Xulin, co-founder of Yuejiang Technology, stated that the actions X-Trainer performs in the video come from end-to-end control by the imitation learning neural network and are completely autonomous after training. The robot's stability and speed have both improved significantly. The overall scheme combines a large vision-language model with an imitation learning neural network.

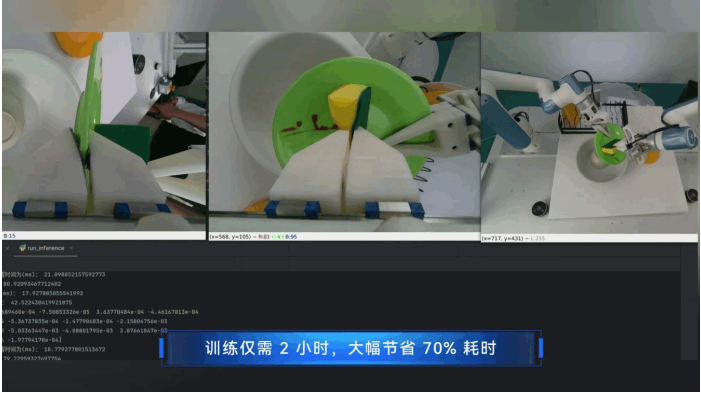

First, the robot's top camera feeds its image into the vision-language model, which allows X-Trainer to complete the following tasks:

- Describe the work scene: dishes stained with food residue, a sponge placed on a yellow plate, and a metal rack behind the dishes, which together make up a kitchen scene.

- Reason about the task: dishes with food residue + a sponge on a yellow plate + a metal rack behind the dishes = clean the dishes and store them in the metal rack.
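The two steps above can be sketched as a small data flow: an image goes in, a structured scene description comes out, and a task is inferred from it. In the sketch below both functions are hypothetical stubs with hard-coded output and a fixed rule. They stand in for the vision-language model only to show the shape of the pipeline and do not call or represent any real model API.

```python
from dataclasses import dataclass


@dataclass
class SceneDescription:
    setting: str
    objects: list


def describe_scene_stub(image_id):
    """Hypothetical stand-in for the model's scene description step."""
    return SceneDescription(
        setting="kitchen",
        objects=[
            "dishes with food residue",
            "sponge on yellow plate",
            "metal rack behind dishes",
        ],
    )


def infer_task_stub(scene):
    """Hypothetical stand-in for task reasoning: a fixed rule, not a model."""
    has_dirty_dishes = any("residue" in o for o in scene.objects)
    has_sponge = any("sponge" in o for o in scene.objects)
    has_rack = any("rack" in o for o in scene.objects)
    if has_dirty_dishes and has_sponge and has_rack:
        return "clean the dishes and store them in the metal rack"
    return "no task inferred"


scene = describe_scene_stub("top_camera_frame")
task = infer_task_stub(scene)
```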

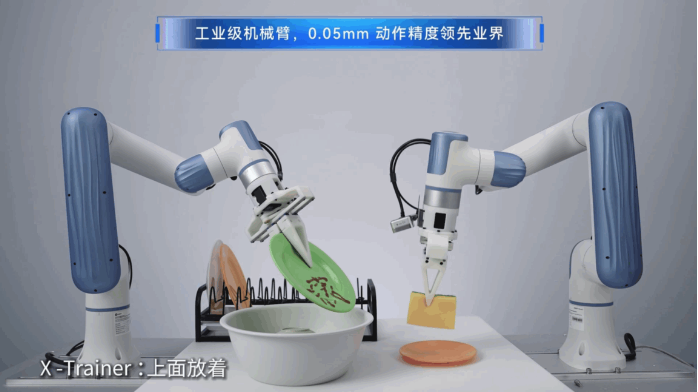

For the dual-arm operation, all actions are driven by an end-to-end neural network. The network receives images from three cameras (one overhead and one on each hand) and completes inference at 25 Hz. Arm motion at 250 Hz is then generated through a high-performance online motion planning interface. By comparison, according to public information, the network on Figure 01 receives onboard images at 10 Hz. Relative to that 10 Hz figure, X-Trainer's 25 Hz end-to-end rate and high-performance motion interface improve response speed by 150%, further improving the smoothness of the robot's operation.
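The numbers in this paragraph can be checked with a little arithmetic, and the relationship between the two rates can be sketched in code. Going from 10 Hz to 25 Hz is a (25 - 10) / 10 = 150% increase, and a 250 Hz motion loop fed by 25 Hz inference has 10 motion steps per inference result. The sketch below fills those steps by linear interpolation, which is purely an assumption for illustration; the source does not say how the motion planning interface generates its 250 Hz commands, and the function names are hypothetical.

```python
INFERENCE_HZ = 25   # end-to-end network rate stated in the article
MOTION_HZ = 250     # arm motion rate stated in the article
REFERENCE_HZ = 10   # image-receiving rate cited for Figure 01


def relative_increase_percent(new_hz, old_hz):
    """Percentage by which new_hz exceeds old_hz."""
    return (new_hz - old_hz) / old_hz * 100.0


def interpolate_setpoints(start, goal, steps):
    """Evenly spaced setpoints from start (exclusive) to goal (inclusive)."""
    return [start + (goal - start) * (i / steps) for i in range(1, steps + 1)]


# Number of motion commands issued between two consecutive inference results.
steps_per_inference = MOTION_HZ // INFERENCE_HZ

speedup = relative_increase_percent(INFERENCE_HZ, REFERENCE_HZ)

# One joint moving from 0.0 to 1.0 (arbitrary units) within one inference period.
setpoints = interpolate_setpoints(0.0, 1.0, steps_per_inference)
```

Running the inner loop at a higher rate than the network is what lets a comparatively slow policy still produce smooth arm motion.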

In January 2024, Figure showed a video of Figure 01 making coffee and stated that the robot learned these movements end to end, with 10 hours of neural network training. X-Trainer, by contrast, learned from human demonstrations to wash dishes autonomously and to correct quickly for real-time interference after just 2 hours of training.

X-Trainer's fast training benefits from its dual arms with 0.05 mm precision, which give the AI training robot industrial-grade data collection and motion accuracy. This greatly improves the efficiency and quality of task execution and yields the high-quality datasets needed for rapid training. As an industrial-grade robotic arm, it is already widely used in 3C manufacturing, commercial coffee shops, medical moxibustion, and other fields, which helps ensure that trained scenarios can be deployed in practice.

Finally, Lang Xulin stated that imitation learning neural networks and end-to-end image-to-action mapping are developing rapidly, with training speed and quality both improving. Tesla and Figure have each demonstrated related technological achievements. In the name X-Trainer, the X stands for unlimited training possibilities. The release of this training platform aims to support the development of embodied intelligence in China and to provide a high-performance carrier for deploying artificial intelligence in industry.

Embodied intelligence refers to intelligent systems that perceive and act through a physical body. Through interaction between the agent and its environment, such a system obtains information, understands problems, makes decisions, and carries out actions, thereby producing intelligent and adaptive behavior. It is a key carrier for AI to interact with the physical world.

Collaborative robots are an important hardware carrier for embodied intelligence, opening up even greater market space from industry to commerce.

Yuejiang Technology has deployed over 70,000 robots globally, with products and services covering 100 countries and regions. It serves dozens of Fortune Global 500 companies, including Lixun Precision, BYD, Foxconn, Huawei, Toyota, and Volkswagen. Its export volume has ranked first for five consecutive years, and it has a rich foundation of applications and deployment scenarios for embodied intelligence.

Yuejiang Technology has long been committed to breakthroughs in AI+robotics technology and their industrial deployment. It has been rated by CB Insights as one of the 80 most valuable robotics enterprises in the world, and has established cooperative relationships in artificial intelligence with numerous universities around the world, including Oxford University, Carnegie Mellon University, Massachusetts Institute of Technology, and Waseda University. It took the lead in undertaking the Guangdong Province Key Field R&D Program for Artificial Intelligence project on “autonomous learning of complex skills for multi-degree-of-freedom intelligent agents, key components, and 3C manufacturing demonstration applications”. In addition, as a national-level specialized and innovative “little giant” enterprise, Yuejiang took the lead in undertaking a national key R&D program project on intelligent robots in 2022 and has applied for more than 1,200 intellectual property rights. It has been recognized as a national intellectual property advantage enterprise and has built targeted patent portfolios covering core components of collaborative and humanoid robots, electronic skin, teleoperation, imitation learning, and other directions.
